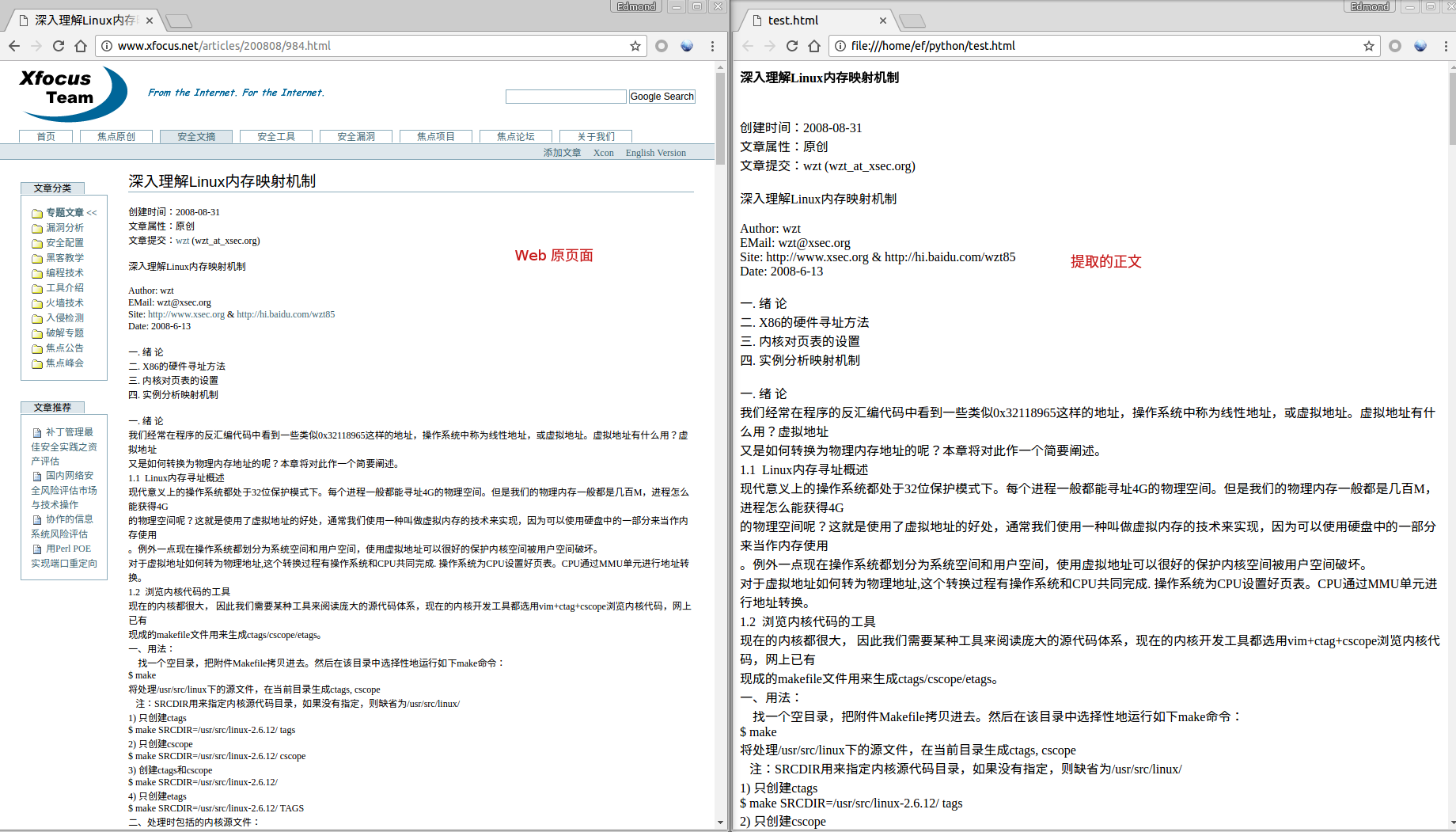

| #encoding=utf-8

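import ast
import re

import jieba
import requests
from bs4 import BeautifulSoup

# List of function words (stop words) to ignore.
# The file 'excludeWords' is assumed to hold a plain Python literal
# (tuple, list, or set of strings), so ast.literal_eval replaces eval.
with open('excludeWords', 'r', encoding='utf-8') as f:
    excludeWords = ast.literal_eval(f.read())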

class domNode:
    parentNode = None
    currNode = None
    innerText = None
    posi = 0

    def __init__(self, parentNode, currNode, innerText, posi):
        self.parentNode = parentNode
        self.currNode = currNode
        self.innerText = innerText
        self.posi = posi

def levenshtein(a, b):
    "Compute the edit distance between two sequences (used as a similarity measure)."
    n, m = len(a), len(b)
    if n > m:
        # Make sure n <= m, to use O(min(n,m)) space
        a, b = b, a
        n, m = m, n
    current = list(range(n + 1))
    for i in range(1, m + 1):
        previous, current = current, [i] + [0] * n
        for j in range(1, n + 1):
            add, delete = previous[j] + 1, current[j - 1] + 1
            change = previous[j - 1]
            if a[j - 1] != b[i - 1]:
                change = change + 1
            current[j] = min(add, delete, change)
    return current[n]

def filter_words(sourList, filterList):
    # Materialize the input into a list (jieba.cut returns a generator).
    # The original type check compared a type object with a string, so it
    # was always true and list() was always applied.
    dest = list(sourList)
    if filterList is None or len(filterList) == 0:
        return dest
    return [i for i in dest if i not in filterList]

def getContextByNode(htmlSoup, titleKeyWordList, filterList):
    sentenceList = jieba.cut(htmlSoup.string)
    sentenceList = filter_words(sentenceList, excludeWords)
    sentenceList = filter_words(sentenceList, filterList)
    if levenshtein(sentenceList, titleKeyWordList) >= len(sentenceList):
        return None
    return sentenceList

def getHTMLContext(html, filterList):
    "Extract the main body text of an HTML page, without tag markup."
    if html is None or len(html) == 0:
        return ""
    encodeHtml = encodeSpecialTag(html)
    # Name the parser explicitly to avoid bs4's "guessed parser" warning.
    htmlSoup = BeautifulSoup(encodeHtml, 'html.parser')
    # Remove all script and style content
    for tag in htmlSoup.find_all(['script', 'style']):
        tag.decompose()
    if (htmlSoup.html is None or htmlSoup.html.head is None
            or htmlSoup.html.head.title is None or htmlSoup.html.body is None):
        return ""
    # Extract the title
    title = htmlSoup.html.head.title.string
    if title is None:
        return ""
    titleKeyWordList = jieba.cut(title)
    titleKeyWordList = list(filter(lambda w: w.strip(), titleKeyWordList))
    titleKeyWordList = filter_words(titleKeyWordList, excludeWords)
    titleKeyWordList = filter_words(titleKeyWordList, filterList)
    # Give up if the title contains no content words
    if len(titleKeyWordList) == 0:
        return ""
    markWindowsList = getMarkWindowsList(htmlSoup.html.body)
    # Restore document order
    markWindowsList = sorted(markWindowsList, key=cmp_to_key(lambda x, y: cmp(x.posi, y.posi)))
    context = ""
    for item in markWindowsList:
        innerText = item.innerText
        markWindowKeyWordList = jieba.cut(innerText)
        markWindowKeyWordList = filter_words(markWindowKeyWordList, excludeWords)
        markWindowKeyWordList = filter_words(markWindowKeyWordList, filterList)
        kl = len(markWindowKeyWordList)
        if kl == 0:
            continue
        l = levenshtein(titleKeyWordList, markWindowKeyWordList)
        # Keep the block only if it shares enough keywords with the title
        if l < kl:
            context += innerText.strip() + '.\n'
    return decodeSpecialTag(context)

def cmp(x, y):
    return x - y

def cmp_to_key(mycmp):
    'Convert a cmp= function into a key= function'
    class K(object):
        def __init__(self, obj, *args):
            self.obj = obj
        def __lt__(self, other):
            return mycmp(self.obj, other.obj) < 0
        def __gt__(self, other):
            return mycmp(self.obj, other.obj) > 0
        def __eq__(self, other):
            return mycmp(self.obj, other.obj) == 0
        def __le__(self, other):
            return mycmp(self.obj, other.obj) <= 0
        def __ge__(self, other):
            return mycmp(self.obj, other.obj) >= 0
        def __ne__(self, other):
            return mycmp(self.obj, other.obj) != 0
    return K

def repl(m):
    contents = m.group(3)
    if contents == '</p>':
        return '[[p]]'
    return contents

def encodeSpecialTag(html):
    "These rules help identify the main body text and preserve selected tags (p, br, etc.) by encoding them as [[...]] placeholders."
    comment = re.compile(r"<!--[\s\S]*?-->")
    html = comment.sub('', html)
    # Heading tags: h1-h6 are all normalized to h4
    hPre = re.compile(r"<[\t ]*?[hH][1-6][^<>]*>")
    html = hPre.sub('[[h4]]', html)
    hAfter = re.compile(r"<[\t ]*?/[hH][1-6][^<>]*>")
    html = hAfter.sub('[[/h4]]', html)
    br = re.compile(r"<[ \t]*?br[^<>]*>", re.IGNORECASE)
    html = br.sub('[[br /]]', html)
    hr = re.compile(r"<[ \t]*?hr[^<>]*>", re.IGNORECASE)
    html = hr.sub('[[hr /]]', html)
    strongPre = re.compile(r"<[\t ]*?strong[^<>]*>", re.IGNORECASE)
    html = strongPre.sub('[[strong]]', html)
    strongAfter = re.compile(r"<[\t ]*?/strong[^<>]*>", re.IGNORECASE)
    html = strongAfter.sub('[[/strong]]', html)
    # labelPre=re.compile(r"<[\t ]*?label[^<>]*>", re.IGNORECASE)
    # html=labelPre.sub('[[label]]', html)
    # labelAfter=re.compile(r"<[\t ]*?/label[^<>]*>", re.IGNORECASE)
    # html=labelAfter.sub('[[/label]]', html)
    #
    # spanPre=re.compile(r"<[\t ]*?span[^<>]*>", re.IGNORECASE)
    # html=spanPre.sub('[[span]]', html)
    # spanAfter=re.compile(r"<[\t ]*?/span[^<>]*>", re.IGNORECASE)
    # html=spanAfter.sub('[[/span]]', html)
    # \b keeps the p and a patterns from also matching <pre>, <article>, etc.
    pPre = re.compile(r"<[\t ]*?/p\b[^<>]*>[^<>]*<[\t ]*?p\b[^<>]*>", re.IGNORECASE)
    html = pPre.sub('[[/p]][[p]]', html)
    pAfter = re.compile(r"<[\t ]*?p\b[^<>]*>(?=[^(\[\[)]*\[\[/p\]\]\[\[p\]\])", re.IGNORECASE)
    html = pAfter.sub('[[p]]', html)
    #
    # pAfter=re.compile(r"(\[\[p\]\].*>)([^<>]*)(</p>)", re.IGNORECASE)
    # html=pAfter.sub(repl,html)
    # Drop <a> tags but keep their text
    aPre = re.compile(r"<[\t ]*?a\b[^<>]*>", re.IGNORECASE)
    html = aPre.sub('', html)
    aAfter = re.compile(r"<[\t ]*?/a\b[^<>]*>", re.IGNORECASE)
    html = aAfter.sub('', html)
    # chardet.detect(html)['encoding']
    # [\s\S] already matches any character including newlines, so the
    # trailing (?s) flag (an error since Python 3.11) is not needed.
    js = re.compile(r"<(script)[\s\S]*?</\1>", re.IGNORECASE)
    html = js.sub('', html)
    css = re.compile(r"<(style)[\s\S]*?</\1>", re.IGNORECASE)
    html = css.sub('', html)
    return html

def decodeSpecialTag(html):
    html = html.replace("[[strong]]", "<strong>")
    html = html.replace("[[/strong]]", "</strong>")
    html = html.replace("[[hr /]]", "<hr />")
    html = html.replace("[[br /]]", "<br />")
    html = html.replace("[[h4]]", "<h4>")
    html = html.replace("[[/h4]]", "</h4>")
    html = html.replace("[[label]]", "<label>")
    html = html.replace("[[/label]]", "</label>")
    html = html.replace("[[span]]", "<span>")
    html = html.replace("[[/span]]", "</span>")
    html = html.replace("[[p]]", "<p>")
    html = html.replace("[[/p]]", "</p>")
    return html

def getMarkWindowsList(bodySoup):
    # Breadth-first walk over the body, collecting text nodes and filtering <a> tags
    nodeQueue = []
    innerTextNodeList = []
    nodeQueue.append(bodySoup)
    i = 0
    while len(nodeQueue) > 0:
        currNode = nodeQueue.pop(0)
        for childNode in currNode:
            if childNode.string is not None:
                innerText = childNode.string
                innerText = innerText.replace('\r\n', '').replace('\r', '').replace('\n', '')
                # The two replace() arguments were flattened to plain spaces in
                # extraction; '&nbsp;' and the literal no-break space are assumed.
                tmp = innerText.replace('\t', '').replace('&nbsp;', ' ').replace('\xa0', ' ')
                tmpInt = len(tmp.replace(" ", ""))
                if tmpInt == 0:
                    continue
                # Skip short fragments that are mostly whitespace
                if len(tmp.split(' ')) <= 2 and len(innerText) - tmpInt > tmpInt:
                    continue
                # Skip copyright lines ('&copy;' is assumed; the entity was
                # decoded to the same character as the literal in extraction)
                if len(tmp) > 1 and innerText.find("&copy;") == -1 and innerText.find("©") == -1:
                    dn = domNode(childNode.parent, childNode, innerText, i)
                    innerTextNodeList.append(dn)
                    i += 1
            else:
                # These rules may help exclude junk content
                if childNode.name is not None and childNode.name == 'style':
                    continue
                if childNode.name is not None and childNode.name == 'script':
                    continue
                if childNode.name is not None and childNode.name == "a" and len(childNode.text) <= 2:
                    continue
                if (childNode.name is not None and childNode.parent.name is not None
                        and childNode.name == "span" and childNode.parent.name == "li"
                        and len(childNode.text) <= 3):
                    continue
                nodeQueue.append(childNode)
    return innerTextNodeList

#soup=BeautifulSoup(html)

def test():
    headers = {
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'
    }
    c = requests.get('http://www.xfocus.net/articles/200808/984.html', headers=headers)
    content = c.content.decode('gbk')
    #content=c.text
    print(getHTMLContext(content, ['']))

if __name__ == '__main__':
    test()

|
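
The core test in `getHTMLContext` keeps a text block only when the token-level edit distance between the title keywords and the block keywords is smaller than the number of block keywords, that is, when at least some title words line up with words in the block. The following is a minimal, self-contained sketch of that rule. It swaps `jieba.cut` for a plain whitespace split and introduces a hypothetical helper named `is_related`; both are assumptions made only so the example runs without external dependencies.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (same algorithm as the listing)."""
    n, m = len(a), len(b)
    if n > m:
        # Keep the shorter sequence as `a` to use O(min(n, m)) space.
        a, b = b, a
        n, m = m, n
    current = list(range(n + 1))
    for i in range(1, m + 1):
        previous, current = current, [i] + [0] * n
        for j in range(1, n + 1):
            add, delete = previous[j] + 1, current[j - 1] + 1
            change = previous[j - 1] + (a[j - 1] != b[i - 1])
            current[j] = min(add, delete, change)
    return current[n]


def is_related(title_tokens, block_tokens):
    """Hypothetical helper: True when the block passes the `l < kl` test."""
    if not block_tokens:
        return False
    return levenshtein(title_tokens, block_tokens) < len(block_tokens)


# Whitespace splitting stands in for jieba.cut (an assumption for this sketch).
title = "python edit distance tutorial".split()
related = "a short tutorial on edit distance in python".split()
unrelated = "copyright all rights reserved".split()

print(is_related(title, related))    # True
print(is_related(title, unrelated))  # False
```

When a block shares no token with the title, the distance equals the length of the longer list, so the test fails and the block is dropped. Note that a block much longer than the title can pass with only a single shared word, which is one reason the listing also filters stop words first.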